Achieving the Qlik TBDCDTS Certification is a significant step for any developer who wants to validate their expertise in Big Data and Talend Studio. The official name, "Qlik Talend Big Data Developer Using Talend Studio," reflects a comprehensive exam that tests a wide range of skills, from foundational Big Data concepts to advanced Spark and streaming techniques. Earning it shows employers and colleagues that you can design, implement, and manage robust Big Data solutions with Qlik's Talend tooling.

The TBDCDTS exam is challenging but achievable: 55 questions in 90 minutes, with a passing score of 70%. With a focused study plan and a clear understanding of the exam's structure, you can approach the test with confidence. This blueprint breaks down the nine domains of the TBDCDTS syllabus and offers actionable study strategies and real-world use cases for each. Following it will help you both prepare for the TBDCDTS questions and build a practical skill set that extends far beyond the exam room.
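To turn these headline numbers into a pacing target, here is a quick calculation in Python. It assumes every question carries equal weight and that the 70% threshold is applied to the raw count of correct answers; Qlik may score the exam differently, so treat the result as a rough guide only.

```python
import math

# Figures quoted in this guide; equal weighting per question is an assumption.
QUESTIONS = 55
MINUTES = 90
PASS_RATIO = 0.70


def min_correct(questions: int, pass_ratio: float) -> int:
    """Smallest whole number of correct answers that meets the pass ratio."""
    # Round first to avoid floating-point noise just below a whole threshold.
    return math.ceil(round(questions * pass_ratio, 9))


def seconds_per_question(minutes: int, questions: int) -> float:
    """Average time budget per question, in seconds."""
    return minutes * 60 / questions


if __name__ == "__main__":
    print(min_correct(QUESTIONS, PASS_RATIO))                   # 39
    print(round(seconds_per_question(MINUTES, QUESTIONS), 1))   # 98.2
```

Under these assumptions you need at least 39 correct answers and have a little over a minute and a half per question, which leaves room to flag hard items and return to them.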

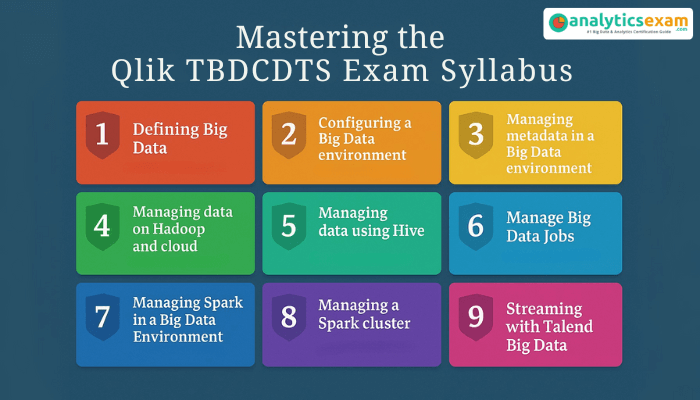

The Qlik TBDCDTS Certification Syllabus at a Glance

The TBDCDTS exam blueprint is meticulously organized into nine domains, each covering a specific area of Big Data development with Talend Studio. We'll explore each domain in detail, providing context and practical advice. The key to a successful study plan is to allocate your time wisely, focusing on the areas where you need the most improvement while reinforcing your existing knowledge.

Here’s a breakdown of the nine domains that form the foundation of your TBDCDTS practice exam preparation:

- Defining Big Data: Understanding the core concepts and technologies.
- Configuring a Big Data environment: Setting up the necessary tools and connections.
- Managing metadata in a Big Data environment: Handling data schemas and repositories.
- Managing data on Hadoop and cloud: Storing and retrieving data from distributed systems.
- Managing data using Hive: Working with SQL-like queries on massive datasets.
- Manage Big Data Jobs: Orchestrating and monitoring data processes.
- Managing Spark in a Big Data Environment: Using Spark for high-speed processing.
- Managing a Spark cluster: Configuring and optimizing Spark environments.
- Streaming with Talend Big Data: Handling real-time data flows.

Domains 1-3: The Foundational Pillar of Qlik Big Data

These first three domains establish the theoretical and practical groundwork for your entire Big Data journey with Talend Studio. Think of them as the building blocks; a solid understanding here will make the later, more complex topics much easier to grasp.

Domain 1: Defining Big Data

This domain covers the fundamental principles of Big Data. It's not just about knowing what Big Data is, but also understanding the "why" and "how." You'll need to be familiar with key concepts like the 3 Vs (Volume, Velocity, and Variety) and the core technologies that enable Big Data processing, such as Hadoop and NoSQL databases. The exam expects you to distinguish between structured, semi-structured, and unstructured data and to know which technology fits each.

- Real-world Use Case: Imagine a retail company that needs to analyze customer purchase history, social media sentiment, and website clickstream data to predict future buying trends. A Big Data developer must understand that this scenario involves all three Vs and requires a unified platform like Hadoop to process the diverse data types.

- Study Tip: Focus on the theoretical distinctions between different types of Big Data technologies (e.g., Hive vs. HBase) and the use cases where each excels. Create a mental map of the Big Data ecosystem.
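The structured, semi-structured, and unstructured categories from this domain map neatly onto the retail example (purchases, clickstream events, social posts). The toy classifier below is a simplified heuristic written purely for illustration; the function, the `PURCHASE_COLUMNS` set, and the sample records are hypothetical and not part of Talend.

```python
import json
from typing import Any

# Hypothetical column set for a structured purchase record.
PURCHASE_COLUMNS = {"customer_id", "sku", "amount"}


def classify(record: Any) -> str:
    """Toy heuristic: 'structured', 'semi-structured' or 'unstructured'."""
    if isinstance(record, dict):
        # Exactly the expected columns behaves like a table row;
        # any other key set is self-describing but not fixed.
        return "structured" if set(record) == PURCHASE_COLUMNS else "semi-structured"
    if isinstance(record, str):
        try:
            parsed = json.loads(record)
        except json.JSONDecodeError:
            return "unstructured"  # free text, e.g. a social media post
        if isinstance(parsed, (dict, list)):
            return "semi-structured"  # JSON such as a clickstream event
    return "unstructured"


purchase = {"customer_id": 7, "sku": "A1", "amount": 19.99}
click = '{"page": "/cart", "ref": {"utm": "spring_ad"}}'
post = "Loving the new jacket from this store!"
```

Real pipelines rarely need such a function, but the three branches mirror why a single platform must handle fixed schemas, nested self-describing records, and raw text side by side.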

Domain 2: Configuring a Big Data Environment

This domain is highly practical and focuses on setting up a working environment. You'll be tested on your ability to configure connections to different Big Data sources, including Hadoop distributions like Cloudera or Hortonworks, as well as cloud platforms like AWS, Azure, or Google Cloud. Key topics include configuring connection properties, managing security, and using Talend Studio's connection wizards.

- Real-world Use Case: A data team needs to set up a new project to ingest data from an on-premise Hadoop cluster and process it using a Talend job. A developer's first task is to configure the Hadoop connection in Talend Studio, specifying the NameNode URI and the ResourceManager address (or the JobTracker URI on older MapReduce 1 clusters), along with authentication details.

- Study Tip: Practice setting up connections to different environments in Talend Studio. Use a free cloud tier (e.g., AWS Free Tier) to get hands-on experience with configuring connections to S3 or a hosted Hadoop service.
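Talend Studio's connection wizard fills these values in for you, but it helps to know which Hadoop client properties they correspond to. The sketch below assembles them in Python: `fs.defaultFS`, `yarn.resourcemanager.address`, and `hadoop.security.authentication` are standard Hadoop property names, and 8020 and 8032 are common default ports, while the helper function and host names are hypothetical.

```python
from urllib.parse import urlparse


def hadoop_client_properties(namenode_host: str, resource_manager_host: str,
                             kerberos: bool = False) -> dict:
    """Build core Hadoop client settings for a cluster connection.

    Ports 8020 (NameNode RPC) and 8032 (YARN ResourceManager) are common
    defaults; your distribution may use different ones.
    """
    props = {
        "fs.defaultFS": f"hdfs://{namenode_host}:8020",
        "yarn.resourcemanager.address": f"{resource_manager_host}:8032",
        "hadoop.security.authentication": "kerberos" if kerberos else "simple",
    }
    # Reject an obviously broken NameNode URI (e.g. an empty host name).
    uri = urlparse(props["fs.defaultFS"])
    if uri.scheme != "hdfs" or not uri.hostname:
        raise ValueError(f"invalid NameNode URI: {props['fs.defaultFS']}")
    return props


# Hypothetical hosts for illustration.
props = hadoop_client_properties("nn1.example.com", "rm1.example.com", kerberos=True)
```

With Kerberos enabled, a real connection also needs a principal and keytab; those are left out here.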

Domain 3: Managing Metadata in a Big Data Environment

Metadata is the "data about data," and it's crucial for understanding and managing complex Big Data projects. This domain covers how to define, manage, and share metadata within Talend Studio. You'll need to know how to create repository schemas, import schemas from different sources (e.g., Hive tables), and use them consistently across multiple jobs.

- Real-world Use Case: A company holds data from multiple sources: a database, a CSV file, and a cloud-based file store. A developer must create a single, unified schema in the Talend repository to represent this data. This metadata can then be reused in various jobs, ensuring data consistency and saving development time.

- Study Tip: Spend time working with the Repository in Talend Studio. Practice creating different types of metadata, such as file-delimited schemas and database schemas, and then drag them into a job to see how they are used.
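Talend stores repository schemas as metadata items that you drag into Jobs; the Python sketch below only mimics the underlying idea. One schema (the `Column` and `Schema` classes and the `CUSTOMER` definition are hypothetical) is defined once and reused to validate rows arriving from different sources, so a change to the definition applies everywhere it is used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    name: str
    type_: type
    nullable: bool = True


@dataclass(frozen=True)
class Schema:
    name: str
    columns: tuple

    def validate(self, row: dict) -> list:
        """Return a list of problems; an empty list means the row conforms."""
        errors = []
        for col in self.columns:
            value = row.get(col.name)
            if value is None:
                if not col.nullable:
                    errors.append(f"{col.name}: missing")
            elif not isinstance(value, col.type_):
                errors.append(f"{col.name}: expected {col.type_.__name__}")
        extra = set(row) - {c.name for c in self.columns}
        errors.extend(f"{name}: not in schema" for name in sorted(extra))
        return errors


# Defined once and shared by every job that handles customer data.
CUSTOMER = Schema("customer", (
    Column("id", int, nullable=False),
    Column("email", str),
    Column("country", str),
))

db_row = {"id": 1, "email": "a@example.com", "country": "FR"}
csv_row = {"id": "2", "email": "b@example.com"}  # id arrived as text
```

The CSV row fails because its `id` is a string, which is exactly the kind of mismatch a shared schema surfaces early instead of deep inside one job.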

Domains 4-6: Data Management and Job Orchestration

Once you've mastered the fundamentals, these domains dive into the practical application of Big Data technologies. This is where you’ll learn to manipulate data on various platforms and manage the jobs that perform these operations.

Domain 4: Managing Data on Hadoop and Cloud

This domain focuses on the hands-on aspects of working with HDFS (Hadoop Distributed File System) and cloud storage. The exam will test your ability to use Talend components to read from and write to HDFS, move data between different locations, and interact with cloud storage services like Amazon S3 or Azure Blob Storage. You’ll need to understand concepts like file formats (Parquet, Avro) and partitioning.

- Real-world Use Case: A financial services company needs to move large volumes of transaction data from its internal database to a data lake on Amazon S3. The developer uses Talend jobs to extract the data, convert it to Parquet for efficient querying, and then load it into the S3 bucket.

- Study Tip: Work through tutorials on using Talend components like tHDFSInput, tHDFSOutput, and tS3Put. Understand the difference between tHDFSInput, which reads the contents of an HDFS file into the job's data flow, and tHDFSGet, which copies files from HDFS to a local directory, and know when to use each.
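Partitioning is usually expressed in the storage path itself. The sketch below computes Hive-style partition prefixes (`key=value` directories) for transaction records bound for an S3 data lake. The bucket name, table prefix, and helper functions are hypothetical, and actually writing the Parquet files (for example from a Talend job) is out of scope.

```python
from collections import defaultdict
from datetime import date


def partition_key(bucket: str, table: str, txn_date: date) -> str:
    """Hive-style partition prefix, e.g. .../year=2024/month=03/."""
    return (f"s3://{bucket}/{table}/"
            f"year={txn_date.year}/month={txn_date.month:02d}/")


def group_by_partition(bucket: str, table: str, records: list) -> dict:
    """Group records so each partition can be written as its own Parquet file(s)."""
    groups = defaultdict(list)
    for rec in records:
        groups[partition_key(bucket, table, rec["date"])].append(rec)
    return dict(groups)


# Hypothetical transactions for illustration.
txns = [
    {"id": 1, "date": date(2024, 3, 5), "amount": 120.0},
    {"id": 2, "date": date(2024, 3, 28), "amount": 75.5},
    {"id": 3, "date": date(2024, 4, 1), "amount": 10.0},
]
parts = group_by_partition("example-data-lake", "transactions", txns)
```

Queries that filter on `year` and `month` can then skip irrelevant prefixes entirely, which is the main payoff of partitioning.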

Domain 5: Managing Data using Hive

Apache Hive provides a SQL-like interface for querying data stored in Hadoop. This domain is critical for anyone who needs to perform analytics on large datasets without writing complex MapReduce code. The exam will test your knowledge of HiveQL, different Hive table types (managed vs. external), and how to use Talend components to execute Hive queries and load data into Hive tables.

- Real-world Use Case: A marketing team wants to analyze web log data stored in HDFS. A developer uses a Talend job with the tHiveRow component to run a HiveQL query that aggregates user activity and calculates key metrics, making the data easily accessible for business intelligence tools.

- Study Tip: Study the differences between managed and external tables. Practice creating both types of tables in Talend Studio and loading data into them. This is a common area for TBDCDTS questions.
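The practical difference between managed and external tables shows up in the DDL and in what `DROP TABLE` does. The helper below is a hypothetical sketch that emits HiveQL you could paste into a tHiveRow component; the table name, columns, HDFS location, and the aggregation query are made up to match the web log example.

```python
def create_table_ddl(name: str, columns: dict, external: bool = False,
                     location: str = "", fmt: str = "PARQUET") -> str:
    """Return a HiveQL CREATE TABLE statement.

    Managed table: Hive owns the data, so DROP TABLE deletes the files.
    External table: Hive tracks only metadata, so DROP TABLE leaves the
    files at LOCATION in place.
    """
    # Hive allows external tables without LOCATION; this sketch requires one
    # because pointing at existing files is the usual reason to go external.
    if external and not location:
        raise ValueError("this sketch requires a LOCATION for external tables")
    cols = ",\n  ".join(f"{col} {hive_type}" for col, hive_type in columns.items())
    kind = "EXTERNAL TABLE" if external else "TABLE"
    ddl = f"CREATE {kind} IF NOT EXISTS {name} (\n  {cols}\n)\nSTORED AS {fmt}"
    if location:
        ddl += f"\nLOCATION '{location}'"
    return ddl + ";"


web_logs = {"user_id": "STRING", "url": "STRING", "ts": "TIMESTAMP"}
raw_ddl = create_table_ddl("web_logs_raw", web_logs, external=True,
                           location="/data/raw/web_logs")

# An aggregation a tHiveRow component could run against that table.
DAILY_ACTIVITY_SQL = (
    "SELECT user_id, to_date(ts) AS activity_day, COUNT(*) AS page_views "
    "FROM web_logs_raw GROUP BY user_id, to_date(ts)"
)
```

Making raw logs external means an accidental `DROP TABLE` removes only the table definition, not the files other teams may still read.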

Domain 6: Manage Big Data Jobs

This domain is all about job orchestration and administration. It covers the lifecycle of a Big Data job in Talend Studio, from design and development to deployment and monitoring. You’ll need to know how to set up job dependencies, schedule jobs, and troubleshoot common errors. The exam may also cover the differences between local execution and remote execution on a cluster.

- Real-world Use Case: A data pipeline consists of multiple jobs: one to ingest data, another to clean it, and a final one to load it into a data warehouse. A developer must orchestrate these jobs to run in a specific sequence, ensuring that the cleansing job only starts after the ingestion job has completed successfully.

- Study Tip: Understand the different ways to execute a job (locally in Talend Studio or remotely on a JobServer or cluster) and the tools available for scheduling and monitoring, such as Talend Administration Center or Talend Management Console.
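In Talend Studio this kind of sequencing is typically built with tRunJob components linked by OnSubjobOk triggers. The Python sketch below models only the rule itself: each step runs only if the previous one succeeded, and the first failure stops the pipeline. The `run_pipeline` function and the step functions are hypothetical stand-ins for the ingest, cleanse, and load jobs.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first failure; return completed names."""
    completed = []
    for name, job in steps:
        try:
            ok = job()
        except Exception as exc:  # treat an uncaught error as a failed job
            print(f"{name} raised {exc!r}; stopping")
            return completed
        if not ok:
            print(f"{name} failed; downstream steps skipped")
            return completed
        completed.append(name)
    return completed


# Hypothetical jobs: cleanse simulates a failure.
def ingest() -> bool:
    return True


def cleanse() -> bool:
    return False


def load() -> bool:
    return True
```

Running `run_pipeline([("ingest", ingest), ("cleanse", cleanse), ("load", load)])` returns `["ingest"]`: the load job never starts because cleansing failed, which is the guarantee the use case asks for.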

Domains 7-9: Advanced Topics - Spark and Streaming

The final three domains are where the rubber meets the road. These topics cover high-performance data processing with Apache Spark and the critical area of real-time data streaming.

Domain 7: Managing Spark in a Big Data Environment

Apache Spark is a unified analytics engine for large-scale data processing. This domain tests your ability to leverage Spark within Talend Studio to build high-performance data pipelines. You'll need to understand key Spark concepts like RDDs (Resilient Distributed Datasets) and DataFrames, and how Talend components such as tMap and tSqlRow behave when used in a Spark Batch Job.

- Real-world Use Case: A telecommunications company needs to process terabytes of call detail records (CDRs) to identify network usage patterns. A developer uses Talend's Spark components to build a job that processes this massive dataset in parallel, typically running much faster than an equivalent MapReduce job because Spark keeps intermediate data in memory instead of writing it to disk between stages.

- Study Tip: Focus on the architectural differences between Spark and MapReduce. Learn the key Spark components in Talend Studio and how they map to Spark's core concepts.
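Spark splits a dataset into partitions, aggregates within each partition, and then merges the partial results, much like `reduceByKey`. The pure-Python sketch below imitates that pattern on a few hypothetical call detail records (tower ID, call minutes). It illustrates the data flow only; threads stand in for executors, and a real Talend Spark Job would distribute partitions across a cluster.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

# Hypothetical CDRs: (cell_tower_id, call_minutes)
cdrs = [("T1", 3), ("T2", 5), ("T1", 2), ("T3", 7), ("T2", 1), ("T1", 4)]


def split(records: list, n: int) -> list:
    """Round-robin records into n partitions."""
    return [records[i::n] for i in range(n)]


def combine_partition(partition: list) -> Counter:
    """Local pre-aggregation inside one partition, before any shuffle."""
    totals = Counter()
    for tower, minutes in partition:
        totals[tower] += minutes
    return totals


def minutes_per_tower(records: list, partitions: int = 3) -> dict:
    # Threads stand in for Spark executors; in CPython they do not give true
    # CPU parallelism, but the partition-then-merge data flow is the same.
    with ThreadPoolExecutor(max_workers=partitions) as pool:
        partials = list(pool.map(combine_partition, split(records, partitions)))
    # Merge the partial results (the reduce side). Counter addition drops
    # non-positive totals, which is fine for positive call minutes.
    return dict(reduce(lambda a, b: a + b, partials, Counter()))
```

Because each partition shrinks to one total per tower before merging, far less data moves between stages, and Spark additionally avoids the disk writes that MapReduce performs between its map and reduce phases.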

Domain 8: Managing a Spark Cluster

Beyond simply using Spark, this domain requires you to understand how to configure and interact with a Spark cluster. You'll be tested on concepts like Spark's different deployment modes (YARN, Mesos, Standalone), cluster resource management, and how to submit a Talend-generated Spark job to a cluster.

- Real-world Use Case: A data scientist needs to run a machine learning model on a large dataset. The developer must configure the Talend job to submit to a Spark cluster running on YARN, ensuring the job has access to sufficient resources (memory and cores) for efficient execution.

- Study Tip: Review the different Spark deployment modes and their respective configurations. Practice configuring a Talend job to run in a distributed mode on a simulated or real cluster.
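Talend submits the generated Spark job for you once its Spark configuration is set, but the same resource decisions appear as flags on a plain `spark-submit` command. The sketch below builds such a command and checks the total resources requested. `--master`, `--deploy-mode`, `--class`, `--num-executors`, `--executor-cores`, and `--executor-memory` are standard spark-submit options; the JAR name, main class, cluster sizes, and helper functions are hypothetical.

```python
import shlex


def spark_submit_command(app_jar: str, main_class: str, executors: int,
                         cores: int, memory_gb: int,
                         deploy_mode: str = "cluster") -> list:
    """Build a spark-submit argument list targeting YARN."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")
    return [
        "spark-submit",
        "--master", "yarn",
        "--deploy-mode", deploy_mode,
        "--class", main_class,
        "--num-executors", str(executors),
        "--executor-cores", str(cores),
        "--executor-memory", f"{memory_gb}g",
        app_jar,
    ]


def fits_cluster(executors: int, cores: int, memory_gb: int,
                 cluster_cores: int, cluster_memory_gb: int) -> bool:
    """Rough capacity check; ignores the driver and spark.executor.memoryOverhead."""
    return (executors * cores <= cluster_cores
            and executors * memory_gb <= cluster_memory_gb)


# Hypothetical job artifact and entry point.
cmd = spark_submit_command("ml_scoring_job.jar", "com.example.ScoringJob",
                           executors=10, cores=4, memory_gb=8)
command_line = shlex.join(cmd)
```

Here 10 executors with 4 cores and 8 GB each request 40 cores and 80 GB; if the YARN queue cannot supply that, executors wait for resources or the job runs with fewer than requested.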

Domain 9: Streaming with Talend Big Data

The final domain covers real-time data processing, a critical skill in today's data-driven world. You'll need to understand the concepts of streaming data, event-driven architecture, and how to use Talend's streaming components (e.g., tKafkaInput and tKafkaOutput in a Spark Streaming Job) to build real-time data pipelines. This domain also touches on topics like micro-batch processing and handling late-arriving data.

- Real-world Use Case: A social media monitoring company needs to analyze tweets in real time to track a new product launch. A developer builds a Talend streaming job that reads data from a Kafka topic, processes it using Spark Streaming, and then stores the analyzed sentiment data in a real-time database.

- Study Tip: Understand the core concepts of streaming data and the role of technologies like Kafka. Practice building a simple streaming job in Talend Studio to reinforce your understanding.
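Micro-batching and late-arriving data are easier to reason about with a small model. The sketch below is a simplified, hypothetical illustration in plain Python, not Talend or Spark code: events arrive in micro-batches, are counted in fixed event-time windows, and a watermark (the largest event time seen in earlier batches minus an allowed lateness) decides which late events are dropped. It is similar in spirit to, but much simpler than, watermarking in Spark Structured Streaming.

```python
from collections import defaultdict
from typing import List, Tuple

Event = Tuple[int, str]  # (event_time_in_seconds, payload)


def process_stream(micro_batches: List[List[Event]], window_s: int = 60,
                   lateness_s: int = 30) -> Tuple[dict, list]:
    """Count events per tumbling event-time window, dropping late events.

    The watermark is the largest event time seen in *earlier* micro-batches
    minus the allowed lateness; events older than it are discarded.
    """
    counts = defaultdict(int)
    dropped = []
    max_seen = None
    for batch in micro_batches:
        watermark = None if max_seen is None else max_seen - lateness_s
        for event_time, payload in batch:
            if watermark is not None and event_time < watermark:
                dropped.append(payload)  # arrived too late to be counted
                continue
            counts[event_time - event_time % window_s] += 1
        for event_time, _ in batch:
            max_seen = event_time if max_seen is None else max(max_seen, event_time)
    return dict(counts), dropped


batches = [
    [(5, "a"), (50, "b")],
    [(70, "c"), (10, "d")],   # "d" is far behind the stream
    [(125, "e"), (40, "f")],  # "f" is late but still within the lateness bound
]
counts, dropped = process_stream(batches)
```

A larger `lateness_s` keeps more stragglers at the cost of holding window state longer, which is the core trade-off when handling late-arriving data.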

Preparing for the Qlik TBDCDTS Exam: A Strategic Approach

To effectively prepare for the TBDCDTS exam, you need more than just rote memorization. A strategic approach involves targeted study, hands-on practice, and a thorough understanding of the exam's format.

Recommended Study Resources

- Qlik's Official Training Materials: The most authoritative source. Qlik's learning platform offers courses that directly align with the exam blueprint, and the Qlik Learning Portal is your go-to resource for accurate information.

- Talend Community and Documentation: The official documentation and community forums are invaluable for understanding specific components and troubleshooting.

- Online Practice Exams: To build confidence and identify your weak spots, take a practice test that simulates the actual exam. Timed practice is one of the most effective ways to prepare for the TBDCDTS questions.

- Hands-On Projects: Nothing beats practical experience. Build small projects that cover each of the nine domains. This will solidify your understanding and help you recall information more easily during the exam.

How to Allocate Your Study Time

The nine domains are not all weighted equally. Domains 1-3 are foundational and might have a smaller percentage of questions, but they are essential for understanding the rest of the content. Domains 4-9, which cover practical application and advanced topics, will likely have more questions.

A good rule of thumb is to spend roughly 20% of your time on the foundational domains and 80% on the practical, advanced topics.
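To turn the 20/80 rule of thumb into concrete numbers, the helper below splits a total study budget across the nine domains. The 60-hour total, the even split within each group, and the function itself are assumptions for illustration; shift hours toward your weakest domains.

```python
FOUNDATION = (1, 2, 3)
APPLIED = (4, 5, 6, 7, 8, 9)


def allocate_hours(total_hours: float, foundation_share: float = 0.20) -> dict:
    """Split study hours: foundation_share over Domains 1-3, the rest over 4-9."""
    per_foundation = total_hours * foundation_share / len(FOUNDATION)
    per_applied = total_hours * (1 - foundation_share) / len(APPLIED)
    plan = {d: per_foundation for d in FOUNDATION}
    plan.update({d: per_applied for d in APPLIED})
    return plan


# Hypothetical 60-hour budget.
plan = allocate_hours(60)
```

With 60 hours this gives about 4 hours to each foundational domain and about 8 hours to each of Domains 4-9.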

- Weeks 1-2 (Foundation): Focus on Domains 1-3. Build a strong theoretical base and practice configuring environments and metadata.

- Weeks 3-4 (Application): Dive into Domains 4-6, the core of Big Data management. Spend a significant amount of time on practical exercises with Hadoop, cloud storage, and Hive.

- Weeks 5-6 (Advanced Topics): Master Domains 7-9. Spark and streaming are high-demand skills and are likely to feature prominently on the exam. Focus on performance optimization and real-time data flows.

- Final Week (Review & Practice): Spend this week taking TBDCDTS practice exams to identify any remaining knowledge gaps. Revisit the official documentation for topics you struggle with.

Tips to Retain Domain-Specific Knowledge

- Mind Maps: Create visual maps for each domain, connecting technologies (e.g., Spark, Hive) to their use cases and relevant Talend components.

- Flashcards: Use digital or physical flashcards for key terms, definitions, and component names.

- Teach a Friend: Explaining a concept to someone else is a powerful way to solidify your understanding.

- Build a Portfolio: Create a small portfolio of projects that demonstrate your skills in each domain. This not only helps with retention but also serves as a valuable asset for job interviews.

Final Thoughts on the TBDCDTS Certification

Passing the Qlik Talend Big Data Developer Using Talend Studio certification is a testament to your skills and dedication. This exam is a comprehensive assessment of your ability to work with Big Data technologies and the powerful Talend platform. By following a structured and hands-on approach, you can confidently prepare for the exam and position yourself for career growth in the fast-paced world of data analytics.

Ready to test your knowledge and prepare for the actual exam? Our comprehensive TBDCDTS practice exam is designed to mirror the real test, helping you build the confidence and expertise needed to succeed. Start your journey today and become a certified Qlik Big Data developer.


The TBDCDTS Certification Exam: Quick Facts

- Exam Name: Qlik Talend Big Data Developer Using Talend Studio
- Code: TBDCDTS
- Duration: 90 minutes
- Questions: 55
- Passing Score: 70%
- Exam Fee: USD 250

This comprehensive guide, combined with focused study and practical experience, will set you on the right path to mastering the TBDCDTS exam and unlocking new career opportunities.